The Twitter timelines team had been looking for a faster implementation of gradient boosted decision trees (GBDT). Twitter Cortex provides DeepBird, which is an ML platform built around Torch. No GBDT solution was available in the Torch ecosystem, so we decided to build our own.

Today we are excited to announce the torch-decisiontree library, which is implemented in Lua and C using Torch’s fast and easy-to-use tensor library. In the tradition of the Torch community and Twitter, we are open-sourcing the library so that others may further benefit and contribute.

Example use case: applying decision forests to Tweets

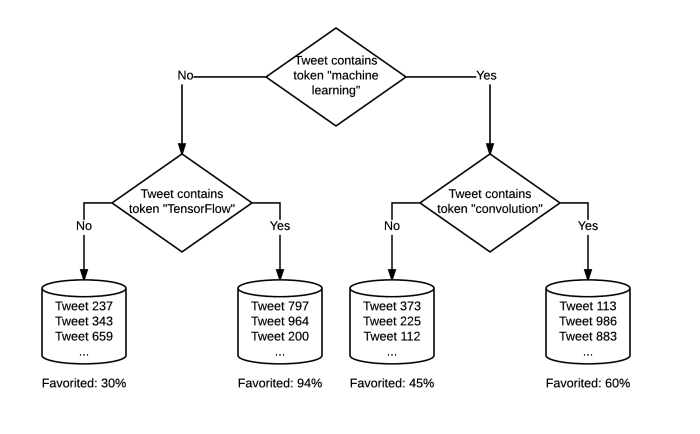

Torch-decisiontree provides the means to train GBDT and random forests. By organizing the data into a forest of trees, these techniques allow us to obtain richer features from data. For example, consider a dataset where each example is a Tweet represented as a bag-of-words. Furthermore, consider that we want the resulting trees to help predict if the tweet will be favorited. After training, each tree would map nodes to words in such a way that a Tweet’s nodes are easier to use to predict if the Tweet will be favorited or not.

Both approaches train decision forests, or ensembles of decision trees. Each decision tree is a classification and regression tree (CART). Each CART is trained on a subset of the available Tweets (that is, examples), and a subset of tokens or words present in the Tweet (that is, features of the example). The decision tree processes each example as a succession of decisions on individual features. Each Tweet thus ends up on a different leaf node of the tree. Each node looks at an individual feature.

Random forests and GBDT

Random forests and GBDT both use machine learning to train decision forests. Random forests train the decision trees independently. On the other hand, GBDT uses boosting to train a sequence of decision trees. Each successive decision tree boosts the performance of the previous trees. We recommend using GBDT for maximum performance.

Deep learning with a decision forest discretizer

Decision trees are often trained as classifiers. Deep learning typically provides better classification accuracy than decision trees. However, combining deep learning with decision forests has proven useful. Instead of using the decision forest as the final classifier, it is used to discretize a feature space. In practice, the decision nodes themselves are used as the output binary feature space.

For example, a Tweet is processed by each tree in the forest. The path of the nodes traversed by the process are taken as the TRUE features of that Tweet. All remaining non-traversed nodes are FALSE. The output feature space thus defines a new feature for each node in the forest (except the root nodes, which are always traversed). Passing an input through such a decision forest discretizer (DFD) generates an output that is easier for deep learning models to process.

Fast training and inference

Multi-threaded training is supported out of the box thanks to tight integration with torch-ipc. Random forests distribute the training of independent trees among threads. GBDT cannot use this distributed approach because the training of successive trees is dependent on the previous trees. Instead, GBDT parallelizes the training of each tree at the feature level. Each thread handles the splitting of a set of features.

Benchmarks for common use-cases have demonstrated a 2-5x speedup on training (for dense datasets) as compared to the popular xgboost implementation. The speed optimizations are due in great part to Conrado Miranda.

You can find all of Twitter’s Open Source projects on our Github page at https://twitter.github.io, and you can download Torch-decisiontree at https://github.com/twitter/torch-decisiontree. We're excited to continue contributing to the open source community and look forward to your feedback.

Did someone say … cookies?

X and its partners use cookies to provide you with a better, safer and

faster service and to support our business. Some cookies are necessary to use

our services, improve our services, and make sure they work properly.

Show more about your choices.