We believe that users must feel safe on Twitter in order to fully express themselves. As our General Counsel Vijaya Gadde explained last week in an opinion piece for the Washington Post, we need to ensure that voices are not silenced because people are afraid to speak up. To that end, we are today announcing our latest product and policy updates, which will help us continue to develop a platform on which users can safely engage with the world at large.

First, we are making two policy changes: one related to prohibited content, and one about how we enforce certain policy violations. We are updating our violent threats policy so that the prohibition is not limited to “direct, specific threats of violence against others” but now extends to “threats of violence against others or promot[ing] violence against others.” Our previous policy was unduly narrow and limited our ability to act on certain kinds of threatening behavior. The updated language better describes the range of prohibited content and our intention to act when users step over the line into abuse.

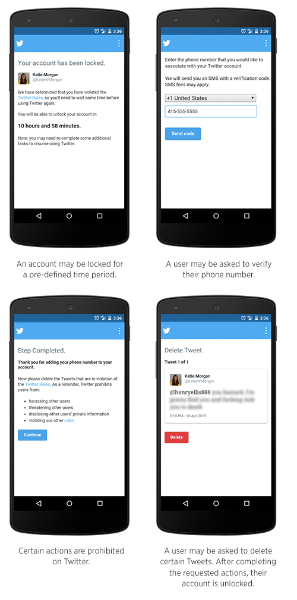

On the enforcement side, in addition to other actions we already take in response to abuse violations (such as requiring users to delete content or verify their phone number), we’re introducing a new enforcement option that gives our support team the ability to lock abusive accounts for specific periods of time. This option gives us leverage in a variety of contexts, particularly where multiple users begin harassing a particular person or group of people.

Second, we have begun to test a product feature to help us identify suspected abusive Tweets and limit their reach. This feature takes into account a wide range of signals and context that frequently correlate with abuse, including the age of the account itself and the similarity of a Tweet to other content that our safety team has in the past independently determined to be abusive. It will not affect your ability to see content that you’ve explicitly sought out, such as Tweets from accounts you follow; instead, it is designed to help us limit the potential harm of abusive content. This feature does not take into account whether the content posted or followed by a user is controversial or unpopular.

While dedicating more resources toward better responding to abuse reports is necessary and even critical, an equally important priority for us is identifying and limiting the incentives that enable and even encourage some users to engage in abuse. We’ll be monitoring how these changes discourage abuse and how they help ensure the overall health of a platform that encourages everyone’s participation. And as the ultimate goal is to ensure that Twitter is a safe place for the widest possible range of perspectives, we will continue to evaluate and update our approach in this critical arena.
